Submission to the DRAGON leaderboard

Submissions to the DRAGON benchmark should be open-sourced and accompanied by a method description (optional during the development phase). Submissions may be kept private temporarily, for example to facilitate manuscript submissions. For more info, please see here.

Figure: Evaluation method for the DRAGON benchmark. Challenge participants must provide all resources necessary to process the reports and generate predictions for the test set. Any processing of reports is performed on the Grand Challenge platform, without any interaction with the participant.

Creation of Your Solution

Please refer to the Development Guide for the algorithm setup.

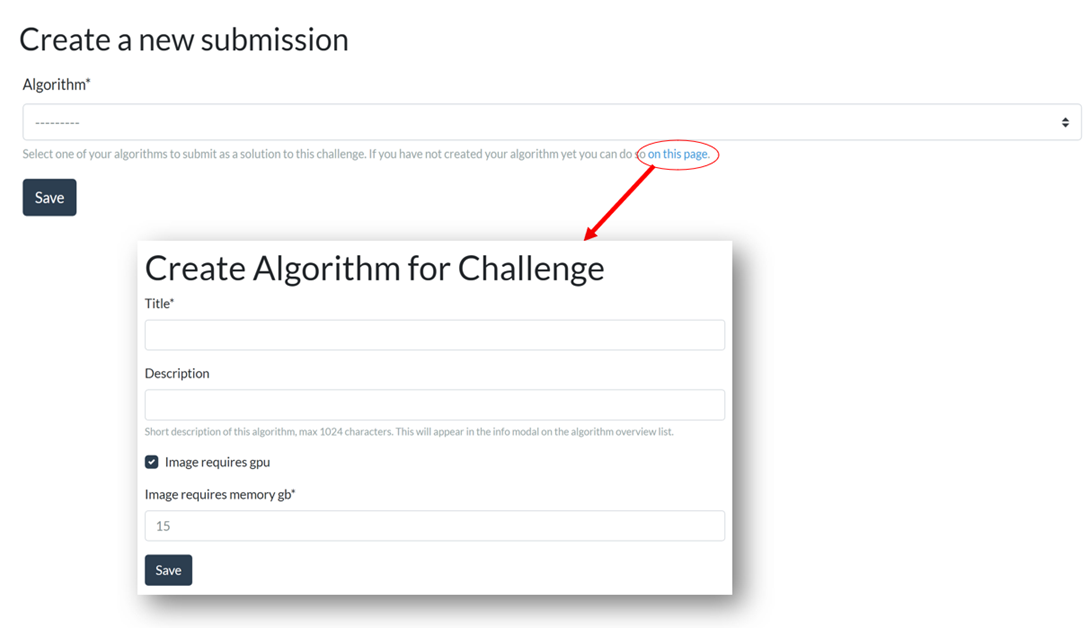

Adding Algorithm Container to Grand Challenge

Algorithm containers need the input/output interfaces of the DRAGON challenge to function. To have these set up automatically, navigate to the "Submit" page and complete the form on this page.

Once complete, click the "Save" button. At this point, your Grand Challenge (GC) algorithm has been created and you are on its homepage. Now, follow the steps here to link your forked DRAGON submission repository to your algorithm.

Once the algorithm is linked, tag your repository to start a build (see the documentation for more information).
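Tagging can be done by hand or scripted. The following is a minimal sketch, assuming git is installed, the script runs inside your forked repository, and the remote is named "origin". The tag name "v0.1.0" and both function names are hypothetical and only serve as an illustration.

```python
import shlex
import subprocess


def tag_commands(tag, remote="origin"):
    """Return the git commands that create an annotated tag and push it."""
    return [
        ["git", "tag", "-a", tag, "-m", f"Release {tag}"],
        ["git", "push", remote, tag],
    ]


def tag_and_push(tag, remote="origin", dry_run=True):
    """Print the commands (dry run) or execute them in the current repository."""
    for command in tag_commands(tag, remote):
        if dry_run:
            print(shlex.join(command))
        else:
            # check=True raises CalledProcessError if git exits with an error.
            subprocess.run(command, check=True)


if __name__ == "__main__":
    # "v0.1.0" is a hypothetical tag name; pass dry_run=False to run git for real.
    tag_and_push("v0.1.0")
```

The dry-run default only prints the commands, so you can review them before anything is pushed.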

It typically takes 20-60 minutes until your container is built and has been activated (depending on the size of your container). After its status is "Active", test your container with a synthetic dataset from the ./test-input/ directory of the DRAGON submission repository by navigating to the "Try-out Algorithm" page of your algorithm.

The required input interfaces for this challenge are:

- NLP Task Configuration

- NLP Training Dataset

- NLP Validation Dataset

- NLP Test Dataset

The required output interfaces for this challenge are:

- NLP Predictions Dataset

To learn more about GC input/output interfaces, visit https://grand-challenge.org/components/interfaces/algorithms/.
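Before trying out a container, it can help to confirm that a local input directory holds one file per required input interface. The sketch below assumes file names derived from the interface titles listed above (for example "nlp-task-configuration.json"). These names are an assumption, not taken from this page, so please verify them against the interface pages. The function name is hypothetical as well.

```python
import tempfile
from pathlib import Path

# ASSUMPTION: file names derived from the interface titles listed above.
# Verify them against the interface pages before relying on this check.
ASSUMED_INPUT_FILES = {
    "NLP Task Configuration": "nlp-task-configuration.json",
    "NLP Training Dataset": "nlp-training-dataset.json",
    "NLP Validation Dataset": "nlp-validation-dataset.json",
    "NLP Test Dataset": "nlp-test-dataset.json",
}


def missing_inputs(input_dir):
    """Return the titles of input interfaces whose file is absent from input_dir."""
    input_dir = Path(input_dir)
    return [
        title
        for title, filename in ASSUMED_INPUT_FILES.items()
        if not (input_dir / filename).is_file()
    ]


if __name__ == "__main__":
    # Demonstrate on a temporary directory that holds only two of the four files.
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("nlp-task-configuration.json", "nlp-test-dataset.json"):
            (Path(tmp) / name).write_text("{}")
        print(missing_inputs(tmp))
```

An empty list means every assumed input file was found.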

Submission to the DRAGON Challenge

Once your training+inference container is uploaded as a fully functional GC algorithm, you are ready to make submissions to the challenge! Please follow each of the steps below to ensure a smooth submission process.

1. Make a submission to the Synthetic Leaderboard

Navigate to the "Submit" page and select the "Synthetic" phase. There, select your algorithm and click "Save" to submit. If there are no errors and evaluation completes successfully, your score will appear on the leaderboard (typically in less than 24 hours). This step is mandatory! It ensures that your algorithm can handle all the task types in the DRAGON challenge, so that we can identify potential issues with your submission early. This helps to keep costs low, allowing us to keep supporting the DRAGON challenge!

Note: the time limit for each of the nine synthetic tasks is shown on the submission page.

Did your algorithm fail? Please use the provided synthetic data to debug your algorithm (e.g., by running the test.sh file).
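When debugging locally, one check that is easy to automate is whether the predictions cover every case in the test dataset. The sketch below assumes that both the test dataset and the predictions are lists of records carrying a "uid" field. This schema is an assumption rather than something stated on this page, and the function name and the "label" key are hypothetical.

```python
def uncovered_uids(test_cases, predictions):
    """Return the uids of test cases that have no prediction, in input order."""
    # ASSUMPTION: every record in both lists is a dict with a "uid" key.
    predicted = {item["uid"] for item in predictions}
    return [case["uid"] for case in test_cases if case["uid"] not in predicted]


if __name__ == "__main__":
    # Toy records; the "text" and "label" keys are illustrative only.
    test_cases = [{"uid": "case-1", "text": "..."}, {"uid": "case-2", "text": "..."}]
    predictions = [{"uid": "case-1", "label": 1}]
    print(uncovered_uids(test_cases, predictions))
```

An empty result means every test case received a prediction. It does not validate the prediction values themselves.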

2. Make a submission to the Validation Leaderboard

Navigate to the "Submit" page and select the "Validation" phase. There, select your algorithm and click "Save" to submit. If there are no errors and evaluation completes successfully, your score will appear on the leaderboard (typically in less than 24 hours). This step is mandatory! It ensures that your algorithm can handle all the tasks in the DRAGON challenge, so that we can identify potential issues with your submission early. This helps to keep costs low, allowing us to keep supporting the DRAGON challenge!

Note: the time limit for each of the 28 tasks is shown on the submission page.

Did your algorithm fail? Please consider these next steps:

- You can reach out to the challenge organizers to ask for additional details about why the algorithm failed. See the challenge home page for the contact e-mail address. (Logs have to be checked manually to ensure no information from the reports is leaked, e.g., when printing a report that would crash the preprocessing pipeline.)

- You can debug algorithms on specific tasks through https://debug-dragon.grand-challenge.org [PENDING].

3. Make a submission to the Test Leaderboard

Congrats on creating a working algorithm! Now, let's find out how good it is! Navigate to the "Submit" page and select the "Test" phase. There, select your algorithm.

Note: the time limit for each of the 28 tasks is shown on the submission page.

This submission also requires two aspects to be checked by the challenge organizers (as specified in more detail here):

- The submission is open-source

- The method is described in the supporting document

Please provide both when submitting your algorithm. Without a valid open-source license and supporting document, the algorithm will not be processed and no score will be shown on the leaderboard!

With all information provided, click "Save" to submit. If there are no errors and evaluation has completed successfully, your algorithm will be processed (typically in less than 24 hours). Your score will be shown on the leaderboard after a manual verification of the open-source license and supporting document.

Invalid submissions will be removed, and teams that repeatedly violate one or more rules will be disqualified.

We look forward to your submissions to the DRAGON challenge. All the best! 👍