Data

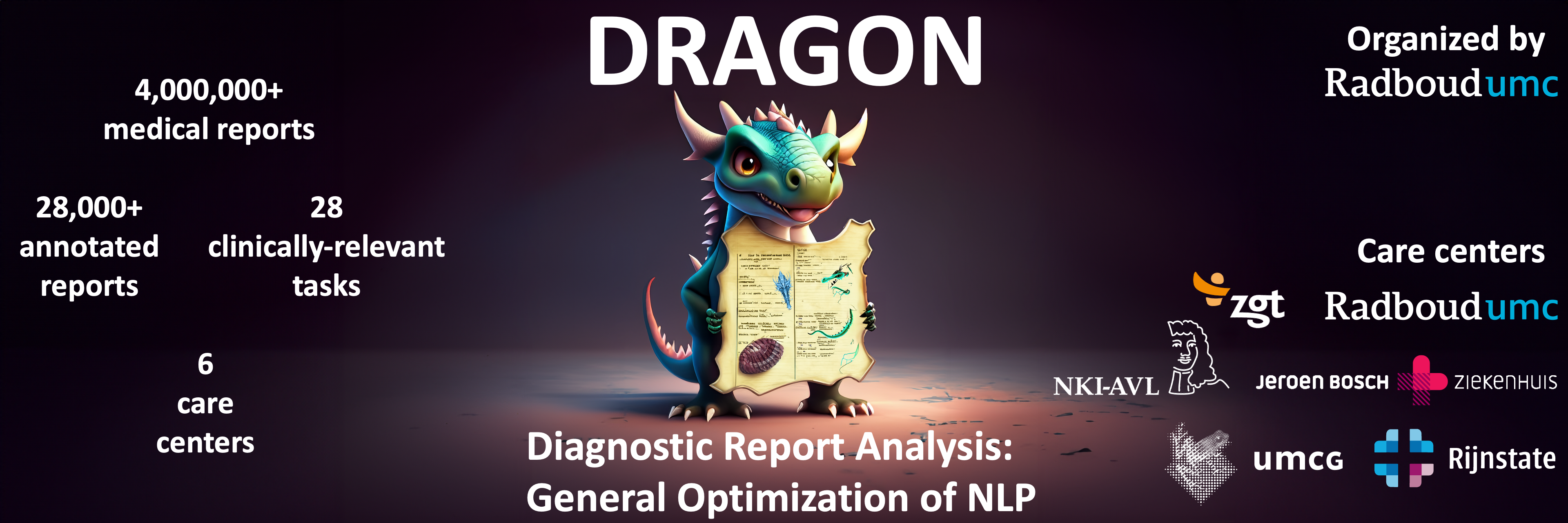

The DRAGON benchmark includes 28,824 clinical reports from 22,895 patients, collected at five Dutch care centers (Radboud University Medical Center, Jeroen Bosch Ziekenhuis, University Medical Center Groningen, Rijnstate, and Antoni van Leeuwenhoek Ziekenhuis). All patients had a diagnostic or interventional visit between 1 January 1995 and 12 February 2024. For 27 of the 28 tasks, all reports were manually annotated. For task 18, the 4,803 development cases were annotated automatically using GPT-4, and the 172 test cases were annotated manually.

An example report for each task is provided on the Tasks page and in the DRAGON sample reports repository.

Characteristics of the benchmark datasets are summarized in the manuscript, which also provides further details about the data.

Data access

All clinical reports and associated annotations included in the DRAGON benchmark are securely stored on the Grand Challenge cloud platform. Participants have full functional access to the datasets for training, validation, and inference purposes through the Grand Challenge platform. Direct downloading or viewing of the clinical reports is not permitted, safeguarding patient confidentiality by design. Each task provides clearly defined training, validation, and testing sets. The training set is intended for model fine-tuning or implementing few-shot learning strategies. The validation set is available for hyperparameter optimization and model selection but must not be used as additional training data. To ensure robust evaluation, performance on the test set is measured through an automated, blinded assessment system that keeps the true labels hidden.
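The intended roles of the training and validation sets can be illustrated with a minimal sketch. It is not taken from the DRAGON baseline: the `(text, label)` data format, the toy keyword classifier, and the names `fit_keyword_model`, `accuracy`, and `select_min_count` are all hypothetical and chosen only for illustration. The point is that the model is fitted on the training set alone, while the validation set is used only to score candidate hyperparameters.

```python
from typing import Dict, List, Optional, Sequence, Set, Tuple

# Hypothetical data format: (report text, binary label).
Example = Tuple[str, int]


def fit_keyword_model(train: Sequence[Example], min_count: int) -> Set[str]:
    """Keep words seen in at least `min_count` positive training reports
    and in no negative training report."""
    pos_counts: Dict[str, int] = {}
    neg_words: Set[str] = set()
    for text, label in train:
        words = set(text.lower().split())
        if label == 1:
            for word in words:
                pos_counts[word] = pos_counts.get(word, 0) + 1
        else:
            neg_words |= words
    return {w for w, c in pos_counts.items() if c >= min_count and w not in neg_words}


def accuracy(keywords: Set[str], data: Sequence[Example]) -> float:
    """Fraction of reports whose predicted label matches the true label."""
    correct = 0
    for text, label in data:
        predicted = int(any(w in keywords for w in text.lower().split()))
        correct += int(predicted == label)
    return correct / len(data)


def select_min_count(
    train: Sequence[Example], val: Sequence[Example], candidates: Sequence[int]
) -> Tuple[int, float]:
    """Pick the hyperparameter with the best validation accuracy."""
    best: Optional[Tuple[int, float]] = None
    for min_count in candidates:
        model = fit_keyword_model(train, min_count)  # fitted on training data only
        score = accuracy(model, val)                 # validation data only scores
        if best is None or score > best[1]:
            best = (min_count, score)
    assert best is not None, "candidates must not be empty"
    return best


# Made-up toy data, not drawn from the benchmark.
train: List[Example] = [
    ("nodule seen in left lung", 1),
    ("nodule in right lung", 1),
    ("suspicious nodule", 1),
    ("lung clear", 0),
    ("no abnormality seen", 0),
]
val: List[Example] = [
    ("nodule present", 1),
    ("fluid in left lung", 0),
    ("clear lungs", 0),
    ("large nodule in liver", 1),
]

best = select_min_count(train, val, [1, 2, 3])
print(best)  # (3, 1.0)
```

In this toy example, the validation examples never enter `fit_keyword_model`; they influence only which `min_count` is chosen. Test-set labels play no role at all, mirroring the blinded evaluation described above.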

To facilitate algorithm development, synthetic datasets for all task types and example reports for each task are provided.

Participants can run their algorithms by submitting their code and associated resources through the Grand Challenge platform. Upon submission, algorithms are automatically executed in a secure, cloud-based environment without participant intervention. All computations, including training, validation, and inference, occur within this protected environment. Results and performance metrics are generated automatically, ensuring reproducibility and transparency. Detailed instructions and templates for preparing and submitting algorithms are available in the DRAGON baseline repository.