Evaluation

- ID

- 437ad325-2d6f-4d5d-9bd7-0f1ae22b3b2a

- Submission ID

- 89575b58-c5f5-4a8e-b57f-1cdf4878420a

- Method ID

- b1187f72-4ce4-4eed-9b5a-ffb9d76847b0

- Status

- Succeeded

- User

- joeran.bosma

joeran.bosma

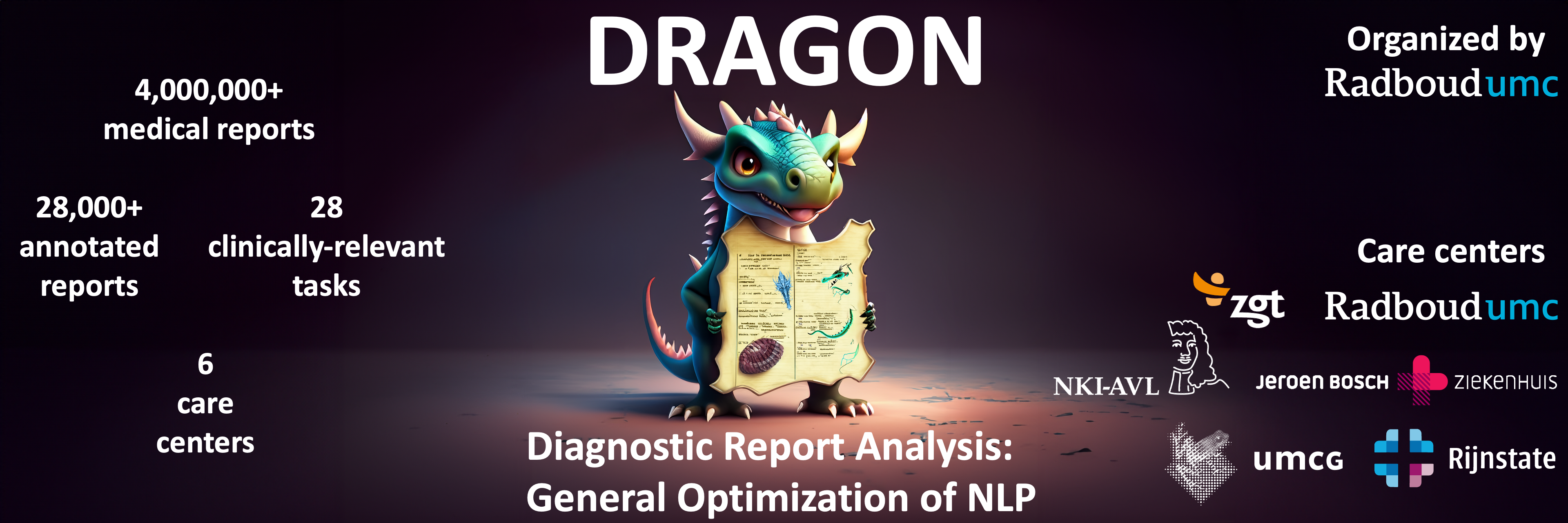

- Challenge

- DRAGON

- Phase

- Synthetic

- Algorithm

- DRAGON CLTL MedRoBERTa.nl (Image Version e3c010fc-ec5e-48fa-a56c-e05da85085a8)

- Submission created

- June 20, 2024, 1:02 p.m.

- Result created

- June 20, 2024, 1:02 p.m.

- Position on leaderboard

- 43

Metrics

```json
{
  "case": {
    "Task103_Example_mednli": {
      "Task103_Example_mednli-fold0": 0.4878048780487805
    },
    "Task106_Example_sl_reg": {
      "Task106_Example_sl_reg-fold0": 0.7786013716725521
    },
    "Task107_Example_ml_reg": {
      "Task107_Example_ml_reg-fold0": 0.755771456134667
    },
    "Task108_Example_sl_ner": {
      "Task108_Example_sl_ner-fold0": 0.20073782639755197
    },
    "Task109_Example_ml_ner": {
      "Task109_Example_ml_ner-fold0": 0.07709102088078024
    },
    "Task102_Example_sl_mc_clf": {
      "Task102_Example_sl_mc_clf-fold0": 0.28136086600564003
    },
    "Task105_Example_ml_mc_clf": {
      "Task105_Example_ml_mc_clf-fold0": 0.667892181755398
    },
    "Task101_Example_sl_bin_clf": {
      "Task101_Example_sl_bin_clf-fold0": 0.6929985337243402
    },
    "Task104_Example_ml_bin_clf": {
      "Task104_Example_ml_bin_clf-fold0": 0.9732647735040079
    }
  },
  "aggregates": {
    "overall": {
      "std": 0.0,
      "mean": 0.5461692120137464
    },
    "Task103_Example_mednli": {
      "std": 0.0,
      "mean": 0.4878048780487805
    },
    "Task106_Example_sl_reg": {
      "std": 0.0,
      "mean": 0.7786013716725521
    },
    "Task107_Example_ml_reg": {
      "std": 0.0,
      "mean": 0.755771456134667
    },
    "Task108_Example_sl_ner": {
      "std": 0.0,
      "mean": 0.20073782639755197
    },
    "Task109_Example_ml_ner": {
      "std": 0.0,
      "mean": 0.07709102088078024
    },
    "Task102_Example_sl_mc_clf": {
      "std": 0.0,
      "mean": 0.28136086600564003
    },
    "Task105_Example_ml_mc_clf": {
      "std": 0.0,
      "mean": 0.667892181755398
    },
    "Task101_Example_sl_bin_clf": {
      "std": 0.0,
      "mean": 0.6929985337243402
    },
    "Task104_Example_ml_bin_clf": {
      "std": 0.0,
      "mean": 0.9732647735040079
    }
  }
}
```
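The "overall" mean in the aggregates equals the unweighted average of the nine per-task means: their sum is about 4.9155, and dividing by 9 gives 0.5461692120137464. Every "std" is 0.0 because each task has only a single fold (fold0). The sketch below re-derives the aggregates block from the case scores. It assumes one particular scheme: per-task mean and population std over folds, then an overall score computed per fold as the mean over tasks, followed by the mean and population std across folds. This scheme is consistent with the numbers shown but is not the DRAGON evaluation code. The function name `aggregate` is hypothetical.

```python
from statistics import fmean, pstdev

# Per-case scores copied from the "case" block above (one fold per task).
case = {
    "Task103_Example_mednli": {"Task103_Example_mednli-fold0": 0.4878048780487805},
    "Task106_Example_sl_reg": {"Task106_Example_sl_reg-fold0": 0.7786013716725521},
    "Task107_Example_ml_reg": {"Task107_Example_ml_reg-fold0": 0.755771456134667},
    "Task108_Example_sl_ner": {"Task108_Example_sl_ner-fold0": 0.20073782639755197},
    "Task109_Example_ml_ner": {"Task109_Example_ml_ner-fold0": 0.07709102088078024},
    "Task102_Example_sl_mc_clf": {"Task102_Example_sl_mc_clf-fold0": 0.28136086600564003},
    "Task105_Example_ml_mc_clf": {"Task105_Example_ml_mc_clf-fold0": 0.667892181755398},
    "Task101_Example_sl_bin_clf": {"Task101_Example_sl_bin_clf-fold0": 0.6929985337243402},
    "Task104_Example_ml_bin_clf": {"Task104_Example_ml_bin_clf-fold0": 0.9732647735040079},
}


def aggregate(case):
    """Hypothetical re-derivation of the "aggregates" block (assumed scheme)."""
    aggregates = {}
    per_fold = {}  # fold name (e.g. "fold0") -> scores of every task in that fold
    for task, folds in case.items():
        scores = list(folds.values())
        # pstdev of a single value is 0.0, matching the "std": 0.0 entries above
        aggregates[task] = {"std": pstdev(scores), "mean": fmean(scores)}
        for fold_key, score in folds.items():
            fold = fold_key.rsplit("-", 1)[1]  # "Task101_...-fold0" -> "fold0"
            per_fold.setdefault(fold, []).append(score)
    # Assumed: overall = unweighted mean over tasks within each fold,
    # then mean and population std of those per-fold values.
    fold_means = [fmean(scores) for scores in per_fold.values()]
    aggregates["overall"] = {"std": pstdev(fold_means), "mean": fmean(fold_means)}
    return aggregates


result = aggregate(case)
print(result["overall"])
```

With more than one fold per task, the same function would yield non-zero "std" values. In that setting the per-fold overall scores would also differ from one another.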
