Evaluation

- ID

- 59168cd6-d9bc-43ec-a88b-49308526ba48

- Submission ID

- c73b330e-18fa-4e71-a9e7-342c1d4ab3c5

- Method ID

- b1187f72-4ce4-4eed-9b5a-ffb9d76847b0

- Status

- Succeeded

- User

- joeran.bosma

- Challenge

-

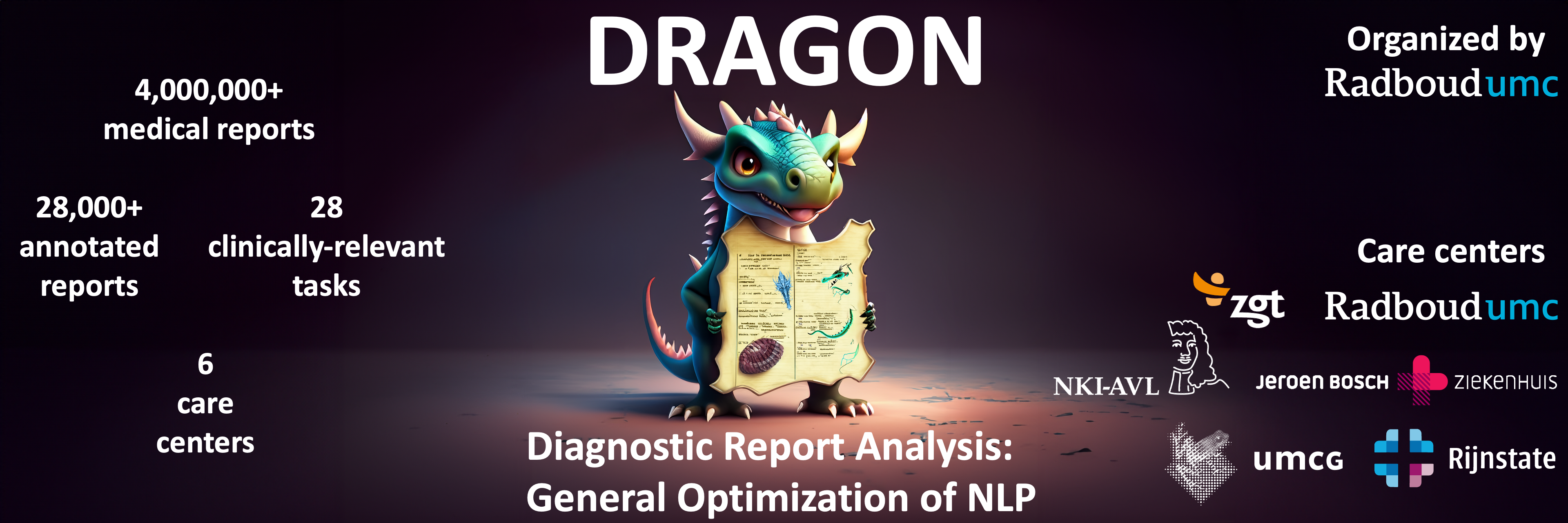

DRAGON

- Phase

- Synthetic

- Algorithm

- DRAGON RoBERTa Large General-domain (Image Version b15581d1-f09d-4317-9a4f-176de945f37f)

- Submission created

- May 13, 2024, 9:24 a.m.

- Result created

- May 13, 2024, 9:26 a.m.

- Position on leaderboard

- 30

Metrics

```json
{
  "case": {
    "Task103_Example_mednli": {
      "Task103_Example_mednli-fold0": 0.8433734939759037
    },
    "Task106_Example_sl_reg": {
      "Task106_Example_sl_reg-fold0": 0.8125208744102831
    },
    "Task107_Example_ml_reg": {
      "Task107_Example_ml_reg-fold0": 0.7581392628131182
    },
    "Task108_Example_sl_ner": {
      "Task108_Example_sl_ner-fold0": 0.271738809277589
    },
    "Task109_Example_ml_ner": {
      "Task109_Example_ml_ner-fold0": 0.18439399618018018
    },
    "Task102_Example_sl_mc_clf": {
      "Task102_Example_sl_mc_clf-fold0": 0.6424970208085068
    },
    "Task105_Example_ml_mc_clf": {
      "Task105_Example_ml_mc_clf-fold0": 0.7595709875728873
    },
    "Task101_Example_sl_bin_clf": {
      "Task101_Example_sl_bin_clf-fold0": 0.3418255131964809
    },
    "Task104_Example_ml_bin_clf": {
      "Task104_Example_ml_bin_clf-fold0": 0.9897470950102529
    }
  },
  "aggregates": {
    "overall": {
      "std": 0.0,
      "mean": 0.6226452281383558
    },
    "Task103_Example_mednli": {
      "std": 0.0,
      "mean": 0.8433734939759037
    },
    "Task106_Example_sl_reg": {
      "std": 0.0,
      "mean": 0.8125208744102831
    },
    "Task107_Example_ml_reg": {
      "std": 0.0,
      "mean": 0.7581392628131182
    },
    "Task108_Example_sl_ner": {
      "std": 0.0,
      "mean": 0.271738809277589
    },
    "Task109_Example_ml_ner": {
      "std": 0.0,
      "mean": 0.18439399618018018
    },
    "Task102_Example_sl_mc_clf": {
      "std": 0.0,
      "mean": 0.6424970208085068
    },
    "Task105_Example_ml_mc_clf": {
      "std": 0.0,
      "mean": 0.7595709875728873
    },
    "Task101_Example_sl_bin_clf": {
      "std": 0.0,
      "mean": 0.3418255131964809
    },
    "Task104_Example_ml_bin_clf": {
      "std": 0.0,
      "mean": 0.9897470950102529
    }
  }
}
```
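The "aggregates" block can be re-derived from the per-case scores. The sketch below is a hypothetical reconstruction, not the DRAGON evaluation code: it assumes each task's `mean`/`std` is taken over that task's folds, and that `overall` averages the task scores within each fold and then summarizes across folds, using the population standard deviation. Under these assumptions a single fold (`fold0`) gives `std` 0.0 everywhere, and the overall mean is the unweighted mean of the nine task scores (about 0.6226), which agrees with the reported value. The names `CASE`, `summarize`, and `aggregate` are illustrative only.

```python
import statistics
from collections import defaultdict

# Per-fold scores copied from the "case" section of the metrics above.
CASE = {
    "Task101_Example_sl_bin_clf": {"Task101_Example_sl_bin_clf-fold0": 0.3418255131964809},
    "Task102_Example_sl_mc_clf": {"Task102_Example_sl_mc_clf-fold0": 0.6424970208085068},
    "Task103_Example_mednli": {"Task103_Example_mednli-fold0": 0.8433734939759037},
    "Task104_Example_ml_bin_clf": {"Task104_Example_ml_bin_clf-fold0": 0.9897470950102529},
    "Task105_Example_ml_mc_clf": {"Task105_Example_ml_mc_clf-fold0": 0.7595709875728873},
    "Task106_Example_sl_reg": {"Task106_Example_sl_reg-fold0": 0.8125208744102831},
    "Task107_Example_ml_reg": {"Task107_Example_ml_reg-fold0": 0.7581392628131182},
    "Task108_Example_sl_ner": {"Task108_Example_sl_ner-fold0": 0.271738809277589},
    "Task109_Example_ml_ner": {"Task109_Example_ml_ner-fold0": 0.18439399618018018},
}


def summarize(values):
    # Assumption: population std (pstdev), which is 0.0 for a single value,
    # consistent with the "std": 0.0 entries in the reported metrics.
    return {"std": statistics.pstdev(values), "mean": statistics.fmean(values)}


def aggregate(case):
    """Hypothetical re-derivation of the "aggregates" block (assumed rule)."""
    result = {}
    per_fold = defaultdict(list)  # fold name -> scores of all tasks in that fold
    for task, folds in case.items():
        # Per-task summary over that task's folds.
        result[task] = summarize(list(folds.values()))
        for key, score in folds.items():
            fold = key.rsplit("-", 1)[1]  # "Task101_...-fold0" -> "fold0"
            per_fold[fold].append(score)
    # Assumed: average over tasks within each fold, then summarize across folds.
    fold_means = [statistics.fmean(scores) for scores in per_fold.values()]
    result["overall"] = summarize(fold_means)
    return result


AGGREGATES = aggregate(CASE)
print(AGGREGATES["overall"])
```

With more than one fold, the same code would yield a non-zero `std` for each task and for `overall`; whether the platform uses population or sample standard deviation in that case cannot be determined from this single-fold result.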
