Evaluation

- ID

- 83a3d36a-00ea-44d2-8b8b-f01d6fd7ecd1

- Submission ID

- 4fa47532-3f56-4306-a7a6-e2494c6fc32e

- Method ID

- b1187f72-4ce4-4eed-9b5a-ffb9d76847b0

- Status

-

Succeeded

- User

-

joeran.bosma

joeran.bosma

- Challenge

-

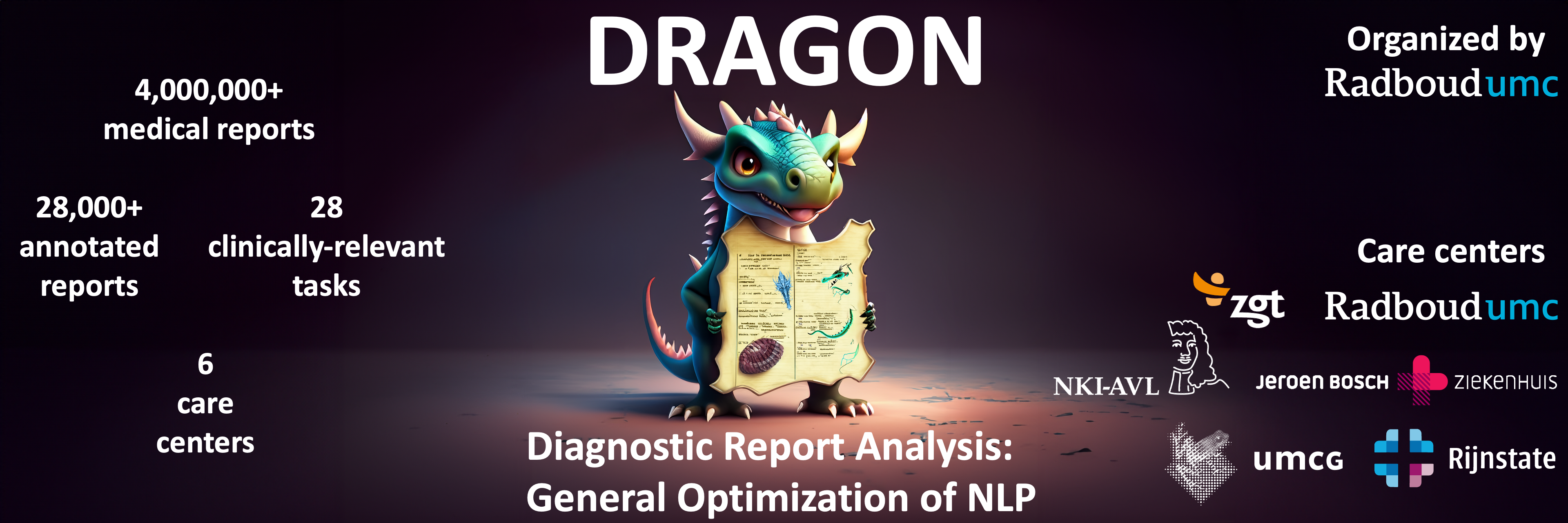

DRAGON

- Phase

-

Synthetic

- Algorithm

-

DRAGON BERT Base Domain-specific

(Image Version 0c7f7895-d269-4918-9589-a6401f0cc26b

)

- Submission created

- May 10, 2024, 11:42 a.m.

- Result created

- May 10, 2024, 11:42 a.m.

- Position on leaderboard

-

32

Metrics

{
  "case": {
    "Task103_Example_mednli": {
      "Task103_Example_mednli-fold0": 0.5041322314049588
    },
    "Task106_Example_sl_reg": {
      "Task106_Example_sl_reg-fold0": 0.7057749206091717
    },
    "Task107_Example_ml_reg": {
      "Task107_Example_ml_reg-fold0": 0.756584775534297
    },
    "Task108_Example_sl_ner": {
      "Task108_Example_sl_ner-fold0": 0.19672900759720513
    },
    "Task109_Example_ml_ner": {
      "Task109_Example_ml_ner-fold0": 0.18802868932182515
    },
    "Task102_Example_sl_mc_clf": {
      "Task102_Example_sl_mc_clf-fold0": 0.5165039516503951
    },
    "Task105_Example_ml_mc_clf": {
      "Task105_Example_ml_mc_clf-fold0": 0.7198099295428477
    },
    "Task101_Example_sl_bin_clf": {
      "Task101_Example_sl_bin_clf-fold0": 0.7619134897360704
    },
    "Task104_Example_ml_bin_clf": {
      "Task104_Example_ml_bin_clf-fold0": 0.9512753992419064
    }
  },
  "aggregates": {
    "overall": {
      "std": 0.0,
      "mean": 0.5889724882931865
    },
    "Task103_Example_mednli": {
      "std": 0.0,
      "mean": 0.5041322314049588
    },
    "Task106_Example_sl_reg": {
      "std": 0.0,
      "mean": 0.7057749206091717
    },
    "Task107_Example_ml_reg": {
      "std": 0.0,
      "mean": 0.756584775534297
    },
    "Task108_Example_sl_ner": {
      "std": 0.0,
      "mean": 0.19672900759720513
    },
    "Task109_Example_ml_ner": {
      "std": 0.0,
      "mean": 0.18802868932182515
    },
    "Task102_Example_sl_mc_clf": {
      "std": 0.0,
      "mean": 0.5165039516503951
    },
    "Task105_Example_ml_mc_clf": {
      "std": 0.0,
      "mean": 0.7198099295428477
    },
    "Task101_Example_sl_bin_clf": {
      "std": 0.0,
      "mean": 0.7619134897360704
    },
    "Task104_Example_ml_bin_clf": {
      "std": 0.0,
      "mean": 0.9512753992419064
    }
  }
}
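
The aggregates above can be reproduced from the per-fold scores. The sketch below is not the challenge's own evaluation code. It assumes that each per-task mean and standard deviation is taken over that task's folds, and that the overall mean is the unweighted average of the nine per-task means. The latter assumption matches the reported value of 0.5889724882931865. The function name `aggregate` and the use of the population standard deviation are illustrative choices. With a single fold per task, every per-task standard deviation is 0.0 under either definition that accepts a single value.

```python
from statistics import mean, pstdev

# Per-task, per-fold scores copied from the "case" object in the metrics.
case = {
    "Task103_Example_mednli": {"Task103_Example_mednli-fold0": 0.5041322314049588},
    "Task106_Example_sl_reg": {"Task106_Example_sl_reg-fold0": 0.7057749206091717},
    "Task107_Example_ml_reg": {"Task107_Example_ml_reg-fold0": 0.756584775534297},
    "Task108_Example_sl_ner": {"Task108_Example_sl_ner-fold0": 0.19672900759720513},
    "Task109_Example_ml_ner": {"Task109_Example_ml_ner-fold0": 0.18802868932182515},
    "Task102_Example_sl_mc_clf": {"Task102_Example_sl_mc_clf-fold0": 0.5165039516503951},
    "Task105_Example_ml_mc_clf": {"Task105_Example_ml_mc_clf-fold0": 0.7198099295428477},
    "Task101_Example_sl_bin_clf": {"Task101_Example_sl_bin_clf-fold0": 0.7619134897360704},
    "Task104_Example_ml_bin_clf": {"Task104_Example_ml_bin_clf-fold0": 0.9512753992419064},
}


def aggregate(case):
    """Hypothetical helper: per-task mean/std over folds plus an overall mean.

    Assumption: the overall mean is the unweighted mean of per-task means.
    """
    result = {}
    for task, folds in case.items():
        scores = list(folds.values())
        # Population std; with a single fold this is 0.0.
        result[task] = {"std": pstdev(scores), "mean": mean(scores)}
    task_means = [stats["mean"] for stats in result.values()]
    result["overall"] = {"mean": mean(task_means)}
    return result


aggregates = aggregate(case)
print(aggregates["overall"]["mean"])
```

The overall standard deviation is left out of the sketch because the metrics do not show how it is computed.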
