Evaluation

- ID

- 95b7a77b-f079-4942-897d-0c2ccf427033

- Submission ID

- 520c3d05-ed0d-4e2b-b8ea-1b6fd7ada295

- Method ID

- b1187f72-4ce4-4eed-9b5a-ffb9d76847b0

- Status

- Succeeded

- User

- joeran.bosma

- Challenge

-

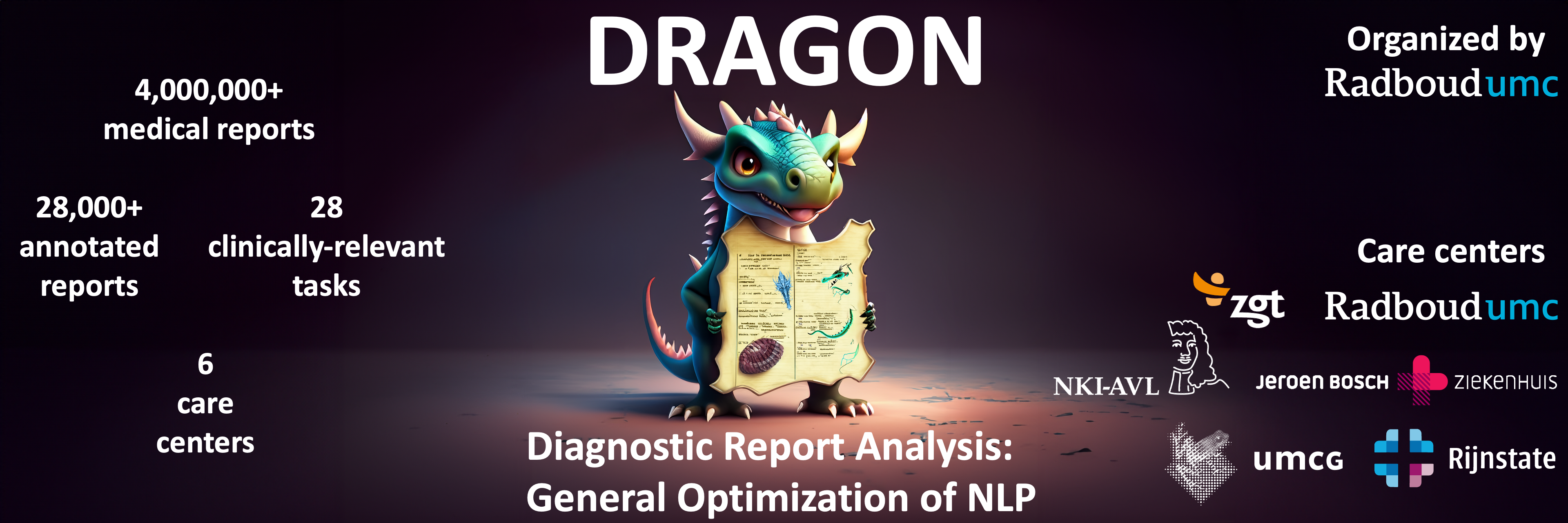

DRAGON

- Phase

- Synthetic

- Algorithm

- DRAGON RoBERTa Base Domain-specific (Image Version a46a80d5-ed0d-48ec-be17-55efdf293b42)

- Submission created

- May 10, 2024, 7:52 a.m.

- Result created

- May 10, 2024, 7:52 a.m.

- Position on leaderboard

- 35

Metrics

```json
{
  "case": {
    "Task103_Example_mednli": {
      "Task103_Example_mednli-fold0": 0.4444444444444444
    },
    "Task106_Example_sl_reg": {
      "Task106_Example_sl_reg-fold0": 0.7456922655412466
    },
    "Task107_Example_ml_reg": {
      "Task107_Example_ml_reg-fold0": 0.740370732606521
    },
    "Task108_Example_sl_ner": {
      "Task108_Example_sl_ner-fold0": 0.3016116808437343
    },
    "Task109_Example_ml_ner": {
      "Task109_Example_ml_ner-fold0": 0.3244219450240466
    },
    "Task102_Example_sl_mc_clf": {
      "Task102_Example_sl_mc_clf-fold0": 0.21440550798834845
    },
    "Task105_Example_ml_mc_clf": {
      "Task105_Example_ml_mc_clf-fold0": 0.6373916576381365
    },
    "Task101_Example_sl_bin_clf": {
      "Task101_Example_sl_bin_clf-fold0": 0.7487170087976539
    },
    "Task104_Example_ml_bin_clf": {
      "Task104_Example_ml_bin_clf-fold0": 0.9264121046417697
    }
  },
  "aggregates": {
    "overall": {
      "std": 0.0,
      "mean": 0.5648297052806557
    },
    "Task103_Example_mednli": {
      "std": 0.0,
      "mean": 0.4444444444444444
    },
    "Task106_Example_sl_reg": {
      "std": 0.0,
      "mean": 0.7456922655412466
    },
    "Task107_Example_ml_reg": {
      "std": 0.0,
      "mean": 0.740370732606521
    },
    "Task108_Example_sl_ner": {
      "std": 0.0,
      "mean": 0.3016116808437343
    },
    "Task109_Example_ml_ner": {
      "std": 0.0,
      "mean": 0.3244219450240466
    },
    "Task102_Example_sl_mc_clf": {
      "std": 0.0,
      "mean": 0.21440550798834845
    },
    "Task105_Example_ml_mc_clf": {
      "std": 0.0,
      "mean": 0.6373916576381365
    },
    "Task101_Example_sl_bin_clf": {
      "std": 0.0,
      "mean": 0.7487170087976539
    },
    "Task104_Example_ml_bin_clf": {
      "std": 0.0,
      "mean": 0.9264121046417697
    }
  }
}
```
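The "aggregates" section can be reproduced from the "case" section. The sketch below assumes a scheme that is consistent with the numbers above but is not confirmed by the page: each task's mean and population standard deviation are taken over its folds, and the overall score is the unweighted mean over all tasks within a fold, then averaged over folds. With a single fold (`fold0`), every standard deviation comes out as 0.0, and the overall mean equals the average of the nine task scores (about 0.5648). The function name `aggregate` is hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Per-fold scores copied from the "case" section of the metrics above.
CASE = {
    "Task101_Example_sl_bin_clf": {"Task101_Example_sl_bin_clf-fold0": 0.7487170087976539},
    "Task102_Example_sl_mc_clf": {"Task102_Example_sl_mc_clf-fold0": 0.21440550798834845},
    "Task103_Example_mednli": {"Task103_Example_mednli-fold0": 0.4444444444444444},
    "Task104_Example_ml_bin_clf": {"Task104_Example_ml_bin_clf-fold0": 0.9264121046417697},
    "Task105_Example_ml_mc_clf": {"Task105_Example_ml_mc_clf-fold0": 0.6373916576381365},
    "Task106_Example_sl_reg": {"Task106_Example_sl_reg-fold0": 0.7456922655412466},
    "Task107_Example_ml_reg": {"Task107_Example_ml_reg-fold0": 0.740370732606521},
    "Task108_Example_sl_ner": {"Task108_Example_sl_ner-fold0": 0.3016116808437343},
    "Task109_Example_ml_ner": {"Task109_Example_ml_ner-fold0": 0.3244219450240466},
}


def aggregate(case):
    """Recompute an "aggregates"-style dict from per-fold case scores.

    Assumed scheme (hypothetical, chosen to match the reported numbers):
    per-task mean/std over folds; overall = unweighted mean over tasks
    within each fold, then mean/std over folds.
    """
    result = {}
    per_fold = defaultdict(list)  # fold name -> scores of every task in that fold
    for task, folds in case.items():
        scores = list(folds.values())
        # Population standard deviation: 0.0 when a task has only one fold.
        result[task] = {"std": pstdev(scores), "mean": mean(scores)}
        for key, score in folds.items():
            fold = key.rsplit("-", 1)[1]  # e.g. "fold0"
            per_fold[fold].append(score)
    overall_per_fold = [mean(scores) for scores in per_fold.values()]
    result["overall"] = {"std": pstdev(overall_per_fold), "mean": mean(overall_per_fold)}
    return result


aggregates = aggregate(CASE)
print(aggregates["overall"])
```

Using the population standard deviation (`pstdev`) rather than the sample version avoids an error when only one fold exists. The sample standard deviation is undefined for a single value.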
